This evening I came across a Google Search result for Game of Thrones audiobooks, and my immediate question was: how can I download all these .mp3 files to my computer at once? Going through each of those directories and clicking every file to download it was, well, a bit too boring and time-consuming.

So I gave it some thought and tried playing with the well-known shell command wget, and that was it! After a few trials, it gave me what I needed.

Wget Download All Files In Directory Linux

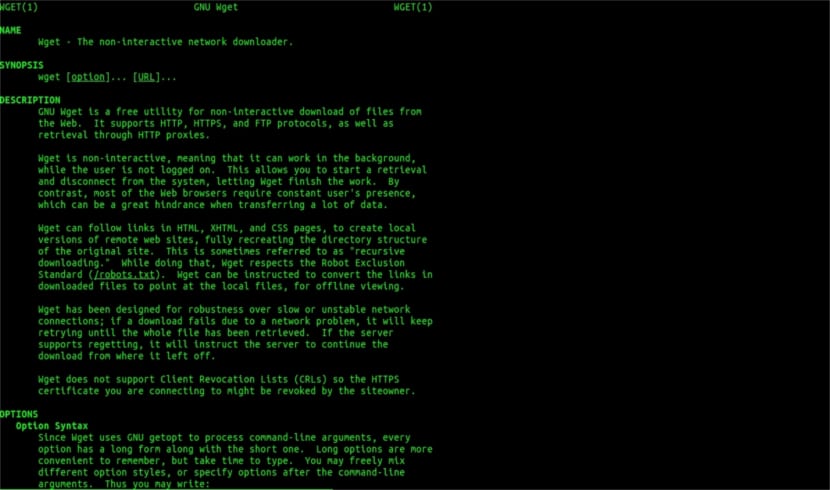

GNU Wget is a command-line utility for downloading files from the web. With Wget, you can download files over HTTP, HTTPS, and FTP. It provides a number of options that let you download multiple files, resume downloads, limit the bandwidth, download recursively, download in the background, mirror a website, and much more.

A recursive wget run puts additional strain on the site's server because it keeps traversing links and downloading files. A good scraper therefore limits the retrieval rate and waits between consecutive fetch requests to reduce the server load.

The options explained below make up the complete wget command that worked for me to download files from a server's directory. One workaround I needed was to notice some 301 redirects and try the new location; given the new URL, wget got all the files in the directory.
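As a rough sketch of the advice about limiting the retrieval rate and waiting between requests, the function below wraps wget with a bandwidth cap and a pause. The function name polite_fetch, the 200k cap, and the 5-second wait are illustrative assumptions, so tune them for the server you are talking to.

```shell
# polite_fetch is a hypothetical helper name; the limits are illustrative.
polite_fetch() {
  # --limit-rate caps the download speed (here about 200 KB/s)
  # --wait pauses the given number of seconds between retrievals
  # --random-wait varies that pause so requests are less regular
  # --continue resumes partially downloaded files
  wget --limit-rate=200k --wait=5 --random-wait --continue "$@"
}

# Usage (placeholder URL):
# polite_fetch --recursive --no-parent "https://example.com/files/"
```

Any extra arguments are passed straight through to wget, so the same wrapper works for a single file or a recursive download.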

Wget A Folder

Explanation of each option

- wget: The command that makes HTTP, HTTPS, or FTP requests and downloads remote files to our local machine.
- --execute='robots = off': Ignores the robots.txt file while crawling through pages. It is helpful if you're not getting all of the files.
- --mirror: Mirrors the directory structure for the given URL. It's a shortcut for -N -r -l inf --no-remove-listing, which means:
  - -N: don't re-retrieve files unless newer than local
  - -r: specify recursive download
  - -l inf: maximum recursion depth (inf or 0 for infinite)
  - --no-remove-listing: don't remove '.listing' files
- --convert-links: Makes links in downloaded HTML or CSS point to local files.
- --no-parent: Don't ascend to the parent directory.
- --wait=5: Waits 5 seconds between retrievals, so that we don't thrash the server.
- <website-url>: The website URL to download the files from.
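Putting the options above together, the command can be sketched as a small shell function. This is assembled from the option list rather than copied from a verified command line; the function name fetch_directory and the example URL are placeholders.

```shell
# fetch_directory is a hypothetical wrapper name; pass the directory URL as $1.
fetch_directory() {
  wget --execute="robots = off" \
       --mirror \
       --convert-links \
       --no-parent \
       --wait=5 \
       "$1"
}

# Usage (placeholder URL, not from the original post):
# fetch_directory "https://example.com/audiobooks/"
```

Because --mirror recreates the remote directory structure, the files end up under a local folder named after the host.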

Wget Entire Directory

Happy Downloading